MCPs: THE AI BUILDING BLOCKS

HOW MCPs CAN ACCELERATE AI-POWERED INNOVATION IN REAL-WORLD DATA

In life sciences, the pressure to move fast while keeping your analytics compliant, accurate, and scientifically rigorous has never been greater. The rise of Large Language Models (LLMs), commonly referred to simply as “AI”, opens up powerful new possibilities to unlock value from real-world data (RWD). But trying to build an LLM system as one giant monolith is like trying to run a clinical trial on the back of a napkin: messy, error-prone, and impossible to scale.

Luckily, there is a better way. Enter Modular Component Pipelines (MCPs), the architecture pattern that’s becoming the gold standard for building scalable, flexible, and explainable AI systems in life sciences. MCPs let you break down complex AI pipelines into composable building blocks that can be tuned, swapped, or reused, just like having a Lego set for your LLM strategy.

Let’s dive into what MCPs are, how they help, and how your team can start using them today.

WHAT ARE MODULAR COMPONENT PIPELINES (MCPs)?

Modular Component Pipelines are structured workflows composed of discrete, reusable, and interoperable AI components. Each module in the pipeline handles a specific task — like data cleaning, enrichment, prompt creation, model inference, or output validation.

Think of MCPs as an assembly line for your LLM — instead of one massive system trying to do everything, you get an orchestrated sequence of micro-capabilities, each purpose-built and independently improvable.

This modular approach brings three core benefits:

Agility: Swap out components without refactoring your whole pipeline.

Transparency: Easier to inspect and validate individual steps for compliance or QA.

Reusability: Standard modules can be shared across projects, trials, or therapeutic areas.
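To make the idea concrete, here is a minimal Python sketch of a pipeline built from small, swappable steps. Everything in it is an illustrative assumption rather than a prescribed implementation: the step names (`clean_text`, `build_prompt`, `fake_model`, `check_output`), the dict-based record, and the stand-in model that makes no real LLM call.

```python
from typing import Callable, Dict, List

# A step takes a record (a plain dict) and returns a new record.
Step = Callable[[Dict], Dict]

def run_pipeline(steps: List[Step], record: Dict) -> Dict:
    """Pass the record through each step in order."""
    for step in steps:
        record = step(record)
    return record

def clean_text(record: Dict) -> Dict:
    # Data cleaning: collapse runs of whitespace in the note.
    return {**record, "note": " ".join(record["note"].split())}

def build_prompt(record: Dict) -> Dict:
    # Prompt creation: wrap the cleaned note in an instruction.
    return {**record, "prompt": f"Summarize this clinical note: {record['note']}"}

def fake_model(record: Dict) -> Dict:
    # Model inference: hypothetical stand-in for a real LLM call.
    return {**record, "output": f"[summary of {len(record['note'])} chars]"}

def check_output(record: Dict) -> Dict:
    # Output validation: flag empty responses.
    return {**record, "valid": bool(record["output"].strip())}

pipeline = [clean_text, build_prompt, fake_model, check_output]
result = run_pipeline(pipeline, {"note": "  Patient   reports mild   nausea. "})
```

Because each step shares the same signature, swapping `fake_model` for a different inference step means changing one entry in the `pipeline` list and nothing else.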

HOW MCPs SUPERCHARGE LLM WORKFLOWS

LLMs are often treated like magic black boxes — give them a prompt, get a response. But behind any enterprise-grade LLM system lies a pipeline of supporting infrastructure, logic, and controls. MCPs formalize that workflow.

Here’s how MCPs elevate your LLM stack:

Data Preprocessing Modules: Standardize, clean, de-identify, and normalize RWD before it hits the model.

Semantic Mapping Components: Enrich unstructured text with clinical ontologies or concepts (e.g., SNOMED CT, RxNorm).

Prompt Engineering Blocks: Dynamically assemble prompts using structured patient or population data.

Inference Engines: Route tasks to the right model (e.g., retrieval-augmented, fine-tuned, or base LLMs).

Output Validation Steps: Apply business logic, PII checks, or human-in-the-loop (HITL) reviews.

Logging & Traceability: Track every step for regulatory transparency and reproducibility.
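As a rough sketch of how preprocessing and traceability could fit together, the Python below wraps each step so that every run leaves an audit trail of input and output fingerprints. The helper names (`fingerprint`, `run_traced`, `deidentify`, `normalize_sex`) and the choice of identifier fields are assumptions made for illustration; a real de-identification step would need far more than dropping two fields.

```python
import hashlib
import json
from typing import Callable, Dict, List, Tuple

def fingerprint(record: Dict) -> str:
    """Short, stable hash of a record, used as an audit-trail reference."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]

def run_traced(steps: List[Callable[[Dict], Dict]], record: Dict) -> Tuple[Dict, List[Dict]]:
    """Run each step in order and log what went in and what came out."""
    trace = []
    for step in steps:
        before = fingerprint(record)
        record = step(record)
        trace.append({"step": step.__name__, "input": before, "output": fingerprint(record)})
    return record, trace

def deidentify(record: Dict) -> Dict:
    # Hypothetical rule: drop two direct-identifier fields.
    return {k: v for k, v in record.items() if k not in {"name", "mrn"}}

def normalize_sex(record: Dict) -> Dict:
    # Normalize free-text sex to a single uppercase letter.
    return {**record, "sex": record["sex"].strip().upper()[:1]}

final, trace = run_traced(
    [deidentify, normalize_sex],
    {"name": "Jane Doe", "mrn": "123", "sex": " female ", "age": 54},
)
```

Each trace entry's `output` fingerprint matches the next entry's `input`, so a reviewer can confirm that nothing changed between steps without the log itself storing patient data.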

With MCPs, you’re not just building an LLM app — you’re engineering an LLM system, the kind that meets the standards of science, strategy, and scrutiny.

🧬 COMMON MCP MODULES FOR LIFE SCIENCES PIPELINES

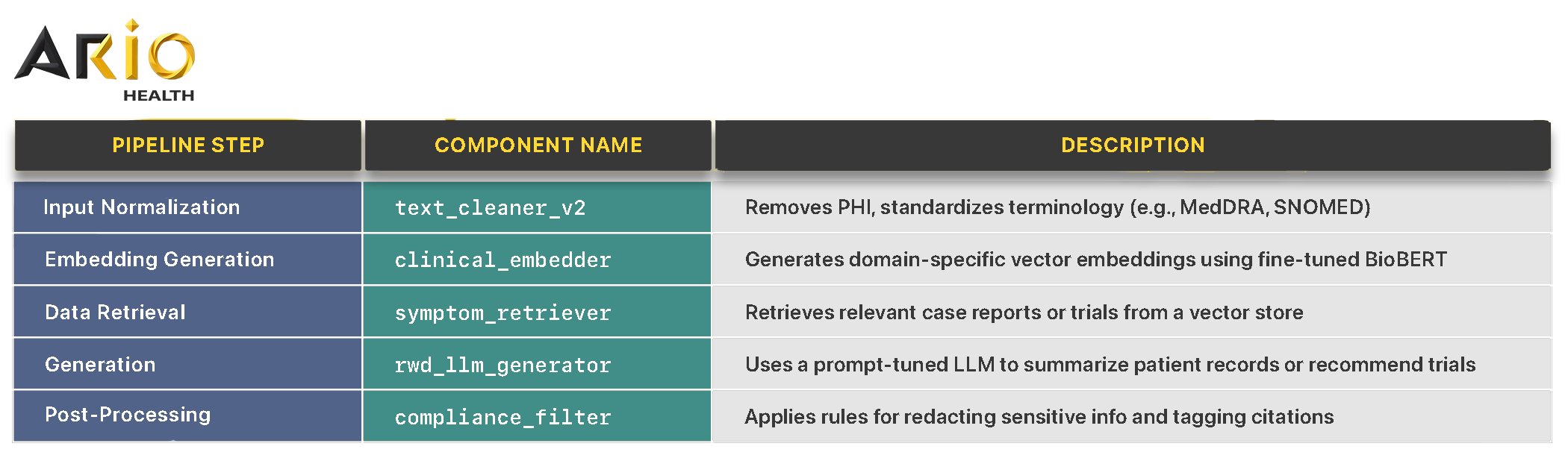

Here’s a high-level map of components that are commonly assembled into LLM pipelines for life sciences companies:

| MODULE | COMMON USE |
|---|---|
| Data Ingestion | Claims, EHR, labs, sensors, registries, trials |
| Normalization | Standardize terms (ICD, LOINC, MedDRA, RxNorm) |
| Temporal Sequencing | Order patient events to generate timelines |
| Contextual Prompting | Build queries that include structured patient context |
| LLM Routing | Decide whether to use GPT-4, fine-tuned models, etc. |
| Validation & Safety | Flag hallucinations, incorrect answers, PII leaks |
| Feedback Loops | Log outcomes and tune prompt logic or model weights |

🔬 Example in Life Sciences LLM Setup
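As one hedged illustration of such a setup, the Python sketch below covers two of the modules from the table: Contextual Prompting and LLM Routing. The function names, the task labels, and the model names in the routing table are placeholders invented for this example, not real model identifiers.

```python
from typing import Dict

def build_prompt(patient: Dict, question: str) -> str:
    """Contextual Prompting: embed structured patient context in the query."""
    events = "; ".join(f"{e['date']} {e['code']}" for e in patient["events"])
    return f"Patient (age {patient['age']}), events: {events}. Question: {question}"

def route(task: str) -> str:
    """LLM Routing: choose a model by task type (model names are placeholders)."""
    routes = {
        "summarize": "general-llm",
        "code_mapping": "fine-tuned-coding-model",
    }
    # Unknown tasks fall back to the general-purpose model.
    return routes.get(task, "general-llm")

patient = {
    "age": 61,
    "events": [
        {"date": "2024-01-10", "code": "C50.9"},
        {"date": "2024-03-15", "code": "Z51.11"},
    ],
}
prompt = build_prompt(patient, "Summarize the treatment course.")
model = route("summarize")
```

Keeping the routing table as plain data means a new model can be trialed by editing one dictionary entry rather than the code that calls it.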

WHY MCPs SHOULD BE YOUR AI ARCHITECTURE OF CHOICE

Life sciences companies can’t afford fragile, ad-hoc LLM solutions. The stakes are too high: compliance, patient outcomes, commercialization timelines. MCPs offer a practical foundation for scaling LLMs across domains while ensuring:

Governance-ready structure

Component-level explainability

Faster experimentation cycles

Future-proof extensibility

At Ario Health, we help pharma and biotech organizations build MCP-powered LLM systems from the ground up — or optimize what’s already in place. From designing modular components to deploying secure pipelines, we bring the architecture expertise life sciences demands.

REAL-WORLD USE CASES FOR MCPs

Modular Component Pipelines unlock high-impact AI use cases that are otherwise too complex to manage in one go. Here are four of the most valuable:

1. Automated Patient Timeline Generation

Building longitudinal views from fragmented RWD requires heavy lifting — parsing dates, normalizing codes, sequencing events. MCPs let you assemble components to do each task cleanly, feeding the timeline into an LLM for interpretation.

“A pharma company creates patient journeys for oncology cohorts to support clinical trial protocol design.”
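The three tasks named above (parsing dates, normalizing codes, sequencing events) can be sketched in a few lines of Python. The code map, the two date formats, and the sample events are assumptions chosen for illustration only; a production mapping would come from a maintained terminology service.

```python
from datetime import date, datetime
from typing import Dict, List

# Illustrative mapping from source codes to one shared label (not a validated code set).
CODE_MAP = {"C50.9": "breast cancer dx", "174.9": "breast cancer dx"}

def parse_date(raw: str) -> date:
    """Accept the two date formats assumed to appear in the sources."""
    for fmt in ("%Y-%m-%d", "%m/%d/%Y"):
        try:
            return datetime.strptime(raw, fmt).date()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {raw!r}")

def build_timeline(events: List[Dict]) -> List[Dict]:
    """Normalize each event, then order the events chronologically."""
    normalized = [
        {
            "date": parse_date(e["date"]),
            "event": CODE_MAP.get(e["code"], e["code"]),  # unmapped codes pass through
            "source": e["source"],
        }
        for e in events
    ]
    return sorted(normalized, key=lambda e: e["date"])

raw_events = [
    {"date": "03/15/2024", "code": "LOCAL-CHEMO-01", "source": "claims"},
    {"date": "2024-01-10", "code": "C50.9", "source": "ehr"},
]
timeline = build_timeline(raw_events)
```

The sorted `timeline` is what a downstream prompting step would serialize for the LLM; keeping `source` on each event preserves where every fact came from.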

2. Intelligent Cohort Discovery

MCPs allow structured filtering of RWD using multiple models and logic layers — from phenotype classifiers to trial inclusion criteria. The final result? Prompt-assembled cohorts with transparent provenance.

“A biotech uses modular pipelines to identify rare disease patients across EHR and claims, combining NLP diagnosis extraction with structured lab data filtering.”
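One way to picture "transparent provenance" is to record the result of every criterion for every patient, not just the final cohort. The Python below is a minimal sketch under that assumption; the criteria, the lab threshold, and the keyword check standing in for NLP diagnosis extraction are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# A criterion is a name plus a function that checks one patient.
Criterion = Tuple[str, Callable[[Dict], bool]]

def evaluate(patient: Dict, criteria: List[Criterion]) -> Dict:
    """Run every criterion and keep the individual results as provenance."""
    checks = {name: bool(check(patient)) for name, check in criteria}
    return {"patient_id": patient["id"], "checks": checks, "included": all(checks.values())}

def note_mentions_condition(p: Dict) -> bool:
    # Naive stand-in for NLP diagnosis extraction (ignores negation and context).
    return "condition x" in p["note"].lower()

def lab_in_range(p: Dict) -> bool:
    # Hypothetical threshold on a structured lab value.
    return p["lab_value"] < 1.0

criteria = [("nlp_diagnosis", note_mentions_condition), ("lab_filter", lab_in_range)]
patients = [
    {"id": "P1", "note": "Suspected Condition X per specialist.", "lab_value": 0.4},
    {"id": "P2", "note": "Condition X ruled in.", "lab_value": 2.3},
    {"id": "P3", "note": "Routine visit.", "lab_value": 0.2},
]
audit = [evaluate(p, criteria) for p in patients]
cohort = [a["patient_id"] for a in audit if a["included"]]
```

The `audit` list explains every exclusion as well as every inclusion, which is the part a reviewer typically asks about first.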

3. Automated Literature Surveillance

Instead of relying on a single LLM to monitor publications, an MCP pipeline can:

Fetch PubMed entries,

Classify relevance,

Summarize findings,

Validate outputs with rules or experts.

“A medtech company’s regulatory team uses MCPs to track safety signals and therapeutic competition through regular searches and evaluations of scientific journal repositories.”
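The four steps listed above can be sketched as separate functions chained together. This Python example is a sketch only: `fetch_entries` returns invented sample records instead of querying PubMed, `summarize` takes the first sentence in place of an LLM summary, and the length rule in `validate` is an arbitrary placeholder.

```python
from typing import Dict, List

def fetch_entries() -> List[Dict]:
    # Stand-in for a real PubMed query; these records are made up for the example.
    return [
        {"id": "A1", "title": "Device X adverse events",
         "abstract": "We report infections after implantation of Device X. Rates were higher in older patients."},
        {"id": "A2", "title": "Unrelated nutrition study",
         "abstract": "Diet quality improved over time."},
    ]

def is_relevant(entry: Dict, keywords: List[str]) -> bool:
    # Classify relevance with a simple keyword match.
    text = f"{entry['title']} {entry['abstract']}".lower()
    return any(k in text for k in keywords)

def summarize(entry: Dict) -> str:
    # Stand-in for an LLM summary: keep the first sentence of the abstract.
    return entry["abstract"].split(". ")[0].rstrip(".") + "."

def validate(summary: str) -> bool:
    # Placeholder rule: summary must be non-empty and at most 200 characters.
    return 0 < len(summary) <= 200

def surveil(keywords: List[str]) -> List[Dict]:
    report = []
    for entry in fetch_entries():
        if not is_relevant(entry, keywords):
            continue
        summary = summarize(entry)
        # Anything failing the rule is flagged for expert review rather than dropped.
        report.append({"id": entry["id"], "summary": summary, "needs_review": not validate(summary)})
    return report

report = surveil(["adverse event", "infection"])
```

Each function can be replaced on its own, for example swapping the keyword classifier for a model-based one, without touching the fetch or validation steps.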

4. Clinical Trial Matching at Scale

Rather than a rigid rules-based engine, an MCP approach enables layered matching: a preprocessing component extracts patient data, a mapper aligns it to trial protocols, and a model interprets trial eligibility.

“A decentralized trial platform uses MCPs to match tens of thousands of patients to appropriate trial arms weekly.”
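The layered matching described above might look like the following Python sketch, with one function per layer. The trial definitions, field names, and the rule-based `interpret` step (standing in for the model that interprets eligibility) are assumptions made for illustration.

```python
from typing import Dict, List

def extract(record: Dict) -> Dict:
    """Preprocessing layer: pull and clean the fields needed for matching."""
    return {
        "id": record["patient_id"],
        "age": int(record["age"]),
        "dx": record["diagnosis"].strip().lower(),
    }

def map_to_protocol(patient: Dict, trial: Dict) -> Dict:
    """Mapping layer: express the patient as pass/fail per protocol criterion."""
    return {
        "age": trial["min_age"] <= patient["age"] <= trial["max_age"],
        "diagnosis": patient["dx"] in trial["diagnoses"],
    }

def interpret(checks: Dict) -> Dict:
    """Interpretation layer: a simple rule standing in for a model's judgment."""
    failed = [name for name, ok in checks.items() if not ok]
    return {"eligible": not failed, "failed": failed}

def match(record: Dict, trials: List[Dict]) -> Dict:
    patient = extract(record)
    return {t["arm"]: interpret(map_to_protocol(patient, t)) for t in trials}

# Hypothetical trial arms with made-up criteria.
trials = [
    {"arm": "ARM-A", "min_age": 18, "max_age": 65, "diagnoses": {"type 2 diabetes"}},
    {"arm": "ARM-B", "min_age": 50, "max_age": 80, "diagnoses": {"type 2 diabetes"}},
]
matches = match({"patient_id": "P7", "age": "47", "diagnosis": " Type 2 Diabetes "}, trials)
```

Returning the failed criteria alongside the eligibility flag gives coordinators a reason for each non-match, which a single yes/no answer would hide.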

TL;DR:

MCPs MAKE LLMs WORK FOR LIFE SCIENCES

If you’re working with LLMs in a life sciences setting, building without MCPs is like coding without Git or trying to manage a trial without protocol templates.

Modular Component Pipelines turn LLMs from science projects into enterprise systems. They let you:

Scale safely

Innovate faster

Stay compliant

Let’s build your future, one clean, reusable module at a time.

NEXT STEPS: FROM STRATEGY TO EXECUTION

Want to see what MCPs would look like for your data and workflows?

Schedule a consultation with us at ario.health — and we’ll help you map your first pipeline. At Ario Health, we specialize in helping life sciences organizations move from idea to implementation. Whether you’re validating a use case, integrating with complex data, or operationalizing MLOps and governance, we’re here to accelerate your journey with the right frameworks and hands-on support.

Ario Health brings deep expertise in life sciences, real-world data, and AI implementation.
