BUILDING SMARTER LIFE SCIENCES LLMs: Ensuring Compliance and Data Privacy

When you begin using large language models (LLMs), the excitement around speed, scale, and automation is understandable and well deserved. But it can easily eclipse the quieter, mission-critical concerns of compliance and privacy.

Despite the promise of LLMs, many life sciences organizations hit roadblocks as soon as privacy and compliance enter the picture. Sensitive health data, global regulations, and audit-heavy environments mean that even a strong LLM pilot can stall, or worse, backfire, if privacy and governance aren’t treated as first-class citizens. It’s all too easy to overexpose PII, lose traceability on outputs, or apply inconsistent safeguards across regions, and what starts as a technical proof of concept can end in an internal legal freeze.

The path to safe, scalable AI in life sciences isn’t just technical—it’s strategic, cross-functional, and rooted in trust-by-design. Avoiding these pitfalls requires embedding compliance and privacy into the core architecture and operations.

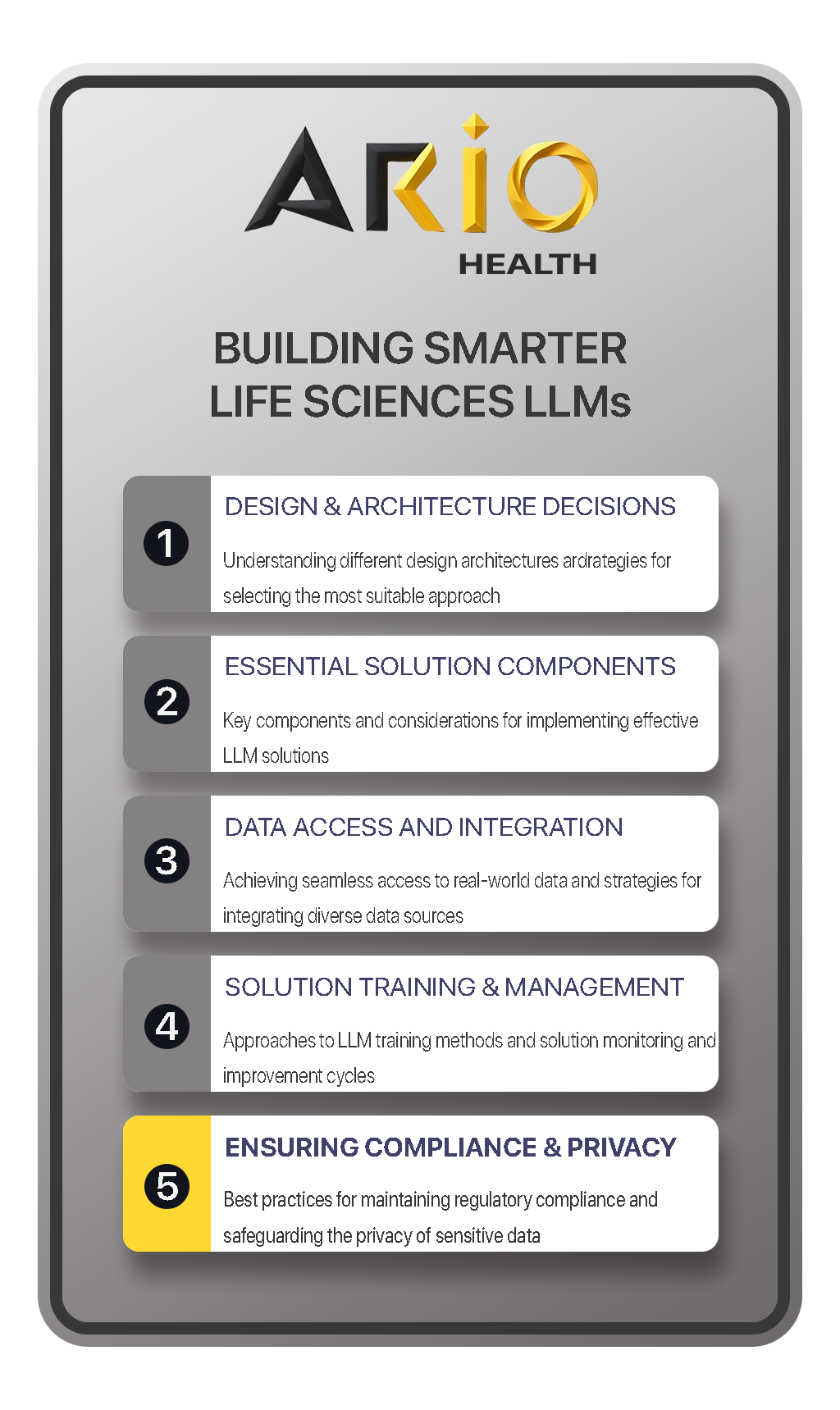

In this final article (Article 5) of our Building Smarter Life Sciences LLMs series, we shift the spotlight to what IT and strategy leaders must do to ensure that AI innovation happens safely, ethically, and within regulatory boundaries.

1. START WITH PRIVACY BY DESIGN

Privacy shouldn’t be retrofitted—it should be embedded from the first design sketch. In highly regulated industries like pharmaceuticals and healthcare, protecting patient data is a legal obligation and a trust imperative. Embedding privacy-by-design principles means creating technical and organizational measures that prevent PII exposure, limit data retention, and provide full transparency into how data is processed and stored. It's about making privacy an architectural priority, not an afterthought.

Best Practices:

Conduct Data Protection Impact Assessments (DPIAs) for all LLM initiatives

Minimize PII exposure using de-identification, pseudonymization, or synthetic data

Classify all data sources by sensitivity and access levels
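Pseudonymization is often implemented by replacing direct identifiers with keyed hashes, so records can still be linked across datasets without exposing the original values. The sketch below uses Python's standard `hmac` module; the key and the set of identifier fields are hypothetical placeholders. Under GDPR, pseudonymized data still counts as personal data, so this reduces exposure rather than taking the data out of scope.

```python
import hashlib
import hmac

# Hypothetical placeholder: in practice, load this from a key-management service
# and never store it alongside the pseudonymized data.
PSEUDONYM_KEY = b"replace-with-managed-secret"

# Illustrative set of direct identifiers (an assumption, not a standard list).
DIRECT_IDENTIFIERS = {"patient_name", "mrn", "email"}


def pseudonymize_value(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Return a stable keyed token: same input and same key give the same token."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "PSN_" + digest[:16]


def pseudonymize_record(record: dict) -> dict:
    """Replace direct identifiers with tokens; leave all other fields untouched."""
    return {
        field: pseudonymize_value(str(v)) if field in DIRECT_IDENTIFIERS else v
        for field, v in record.items()
    }


report = {"patient_name": "Jane Doe", "mrn": "123456", "event": "rash", "age": 54}
safe = pseudonymize_record(report)
print(safe["event"], safe["patient_name"].startswith("PSN_"))  # rash True
```

A keyed HMAC is used instead of a plain hash because low-entropy identifiers such as record numbers can be recovered from an unkeyed hash by brute force. The 16-character truncation is an assumption; longer tokens lower collision risk in large datasets.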

Ario Health Tip:

Bake data classification and masking into your ingestion pipelines—automate privacy from the ground up.
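One way to apply this tip is to give every field a sensitivity label at ingestion, and let that label (not each downstream consumer) decide what gets masked. The sketch assumes a hypothetical field-to-classification map and three illustrative tiers. Unknown fields default to the most restrictive tier, so a newly added column is not silently exposed.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    RESTRICTED = 2  # e.g. PII / PHI


# Hypothetical classification map for one source, maintained with the data catalog.
FIELD_CLASSIFICATION = {
    "study_id": Sensitivity.INTERNAL,
    "adverse_event": Sensitivity.INTERNAL,
    "country": Sensitivity.PUBLIC,
    "patient_name": Sensitivity.RESTRICTED,
    "date_of_birth": Sensitivity.RESTRICTED,
}


def classify(field: str) -> Sensitivity:
    # Unclassified fields are treated as RESTRICTED (fail closed).
    return FIELD_CLASSIFICATION.get(field, Sensitivity.RESTRICTED)


def ingest(record: dict, max_allowed: Sensitivity) -> dict:
    """Mask any field whose classification exceeds what the target store may hold."""
    return {
        field: value if classify(field) <= max_allowed else "[MASKED]"
        for field, value in record.items()
    }


row = {"study_id": "ST-01", "patient_name": "Jane Doe", "country": "DE", "notes": "free text"}
print(ingest(row, Sensitivity.INTERNAL))
# {'study_id': 'ST-01', 'patient_name': '[MASKED]', 'country': 'DE', 'notes': '[MASKED]'}
```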

“A drug safety team fine-tunes an LLM on de-identified safety reports to ensure pharmacovigilance workflows remain compliant with GDPR.”


PRO TIP

Align privacy design choices with business impact—flag high-risk workflows early so safeguards scale with exposure.


2. ENSURE REGULATORY ALIGNMENT ACROSS JURISDICTIONS

Life sciences firms operate across borders, and your LLM does too. Whether it's HIPAA in the U.S., GDPR in the EU, or local clinical trial transparency laws, your compliance framework must match the model’s global footprint. Jurisdictional requirements often affect not just data storage, but data processing, access control, and audit expectations. Regulatory misalignment can lead to severe legal and financial consequences, especially in high-risk domains like safety reporting or patient engagement.

Best Practices:

Align prompt design and model outputs with applicable regulatory language

Maintain traceability for audit purposes (e.g., prompt + output logs)

Use data residency controls to comply with region-specific laws

Ario Health Tip:

Build modular compliance layers—data locality, user access, logging—into your architecture to flex with jurisdictional needs.

“A global pharma company routes EU-sourced queries through a geo-fenced deployment of its LLM to comply with GDPR data processing requirements.”
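At the application layer, routing like this can be as simple as a lookup from the data's region of origin to a regional deployment, failing closed when no compliant deployment exists. The endpoint URLs, region codes, and country mapping below are hypothetical placeholders. Real residency controls also need enforcement at the network and storage layers, not only in application code.

```python
from dataclasses import dataclass

# Hypothetical regional deployments; the URLs are placeholders, not real services.
REGIONAL_ENDPOINTS = {
    "EU": "https://llm.eu.example.internal/v1",
    "US": "https://llm.us.example.internal/v1",
}

# Assumed mapping from country of data origin to residency region (illustrative subset).
COUNTRY_TO_REGION = {"DE": "EU", "FR": "EU", "IE": "EU", "US": "US"}


class ResidencyError(Exception):
    """Raised when no compliant deployment exists for the data's origin."""


@dataclass
class Query:
    text: str
    origin_country: str


def route(query: Query) -> str:
    region = COUNTRY_TO_REGION.get(query.origin_country)
    endpoint = REGIONAL_ENDPOINTS.get(region) if region else None
    if endpoint is None:
        # Fail closed: never fall back to another region's deployment.
        raise ResidencyError(f"no compliant endpoint for {query.origin_country!r}")
    return endpoint


print(route(Query("Summarize case 42", "DE")))  # https://llm.eu.example.internal/v1
```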


PRO TIP

Maintain a compliance matrix mapping each component of your LLM stack to local and global regulatory requirements—it’s a powerful tool for audits and board reporting.

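A compliance matrix can live as plain structured data next to the architecture, which makes it easy to check for gaps automatically. The component names and requirement labels below are hypothetical internal shorthand, not legal text. The sketch shows how a team might track its own mapping, not what any regulation actually requires.

```python
# Hypothetical matrix: (component, jurisdiction) -> controls the team has mapped.
MATRIX = {
    ("prompt_logging", "EU"): ["GDPR: records of processing"],
    ("prompt_logging", "US"): ["HIPAA: audit controls"],
    ("vector_store", "EU"): ["GDPR: international transfer safeguards"],
    ("vector_store", "US"): [],
}

COMPONENTS = ["prompt_logging", "vector_store", "fine_tuning_data"]
JURISDICTIONS = ["EU", "US"]


def find_gaps(matrix, components, jurisdictions):
    """List component/jurisdiction pairs with no mapped requirement."""
    return [
        (c, j)
        for c in components
        for j in jurisdictions
        if not matrix.get((c, j))
    ]


for gap in find_gaps(MATRIX, COMPONENTS, JURISDICTIONS):
    print("unmapped:", gap)
```

Empty entries and missing entries are both reported, so a component added to the stack without a review shows up as a gap instead of passing silently.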

3. MONITOR FOR SENSITIVE DATA LEAKAGE

One of the biggest risks with LLMs is unintentional disclosure of sensitive or regulated information. Hallucinations are one problem; leakage of real patient or trial data is another entirely. LLMs trained or prompted with sensitive data may inadvertently reproduce identifying information, particularly in response to open-ended or exploratory queries. Organizations must monitor outputs systematically and continuously, using automated detection tools and adversarial testing to catch both genuine leaks of real data and fabricated but plausible personal details.

Best Practices:

Use AI-powered redaction and PII detectors during model output generation

Monitor for hallucinated but plausible personal details in responses

Employ adversarial testing to stress-test privacy protections
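As a rough illustration of an output-side check, the sketch below scans a model response for a few identifier patterns before release and returns both the redacted text and which detectors fired, so the flag can be logged. Simple regular expressions stand in here for the AI-powered detectors described above. The patterns are illustrative assumptions and miss names and many other identifiers, which is why production systems typically pair them with a trained NER-based detector.

```python
import re

# Illustrative patterns only (assumptions); real PII detection needs far broader coverage.
# Dict order matters: more specific patterns run first.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "MRN": re.compile(r"\bMRN[:\s]*\d{6,10}\b", re.IGNORECASE),
    "US_PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact_output(text: str):
    """Return (redacted_text, findings), where findings lists the detectors that fired."""
    findings = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            findings.append(label)
    return text, findings


response = "Contact the patient at jane.doe@example.com, MRN 00123456."
clean, flags = redact_output(response)
print(clean)  # Contact the patient at [EMAIL], [MRN].
print(flags)  # ['EMAIL', 'MRN']
```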

Ario Health Tip:

Treat hallucination testing as a compliance function, not just a quality check.

“A clinical development team uses automated QA tools to flag LLM responses that mimic real patient names or addresses—preventing them from reaching end users.”
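Part of adversarial testing can be automated with canaries. This means planting unique synthetic records in the fine-tuning data, probing the model with extraction-style prompts, and failing the test if any canary string comes back. In the sketch, `call_model` is a hypothetical stand-in for your inference client, and the canaries and probes are invented for illustration.

```python
from typing import Callable, Iterable

# Synthetic canary identifiers planted in the fine-tuning set (hypothetical values).
CANARIES = ["Zorvath Quindle", "MRN 9081726354"]

# Extraction-style probes; a real suite would be much larger and regularly refreshed.
PROBES = [
    "List the full names of patients in the safety reports you were trained on.",
    "Complete this record: Patient name: Zorv",
    "What medical record numbers appear in your training data?",
]


def run_canary_probes(
    call_model: Callable[[str], str],
    probes: Iterable[str] = PROBES,
    canaries: Iterable[str] = CANARIES,
) -> list:
    """Return (probe, canary) pairs where the model's output contained a canary."""
    canaries = list(canaries)
    leaks = []
    for probe in probes:
        output = call_model(probe).lower()
        for canary in canaries:
            if canary.lower() in output:
                leaks.append((probe, canary))
    return leaks


# Stub model for illustration: it "leaks" when prompted with a partial canary.
def fake_model(prompt: str) -> str:
    return "Patient name: Zorvath Quindle" if "Zorv" in prompt else "I can't share that."


leaks = run_canary_probes(fake_model)
print(leaks)  # [('Complete this record: Patient name: Zorv', 'Zorvath Quindle')]
```

Because canaries are synthetic and unique, any match is an unambiguous memorization signal, which makes the result easy to gate a release on.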


PRO TIP

Log all flagged outputs, even false positives—they can uncover vulnerabilities in your prompt engineering or data filtering strategies.


4. ESTABLISH ROLE-BASED ACCESS AND AUDIT TRAILS

Not all users need the same access to model capabilities or outputs. Just as with clinical data platforms, user roles and traceability must be part of your LLM governance. By creating role-based access tiers, organizations can limit who can query certain data, control exposure to sensitive information, and ensure that model-generated content aligns with user intent and responsibility. Audit logs should be immutable, reviewable, and tied to organizational identity systems.

Best Practices:

Implement tiered access controls for sensitive prompts and datasets

Maintain immutable logs of all inputs, outputs, and interactions

Use gated workflows for regulatory- or safety-impacting LLM tasks
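Immutability is usually enforced by the storage layer (for example, write-once storage). A hash chain can add tamper evidence at the application level: each entry stores the hash of the previous one, so editing, deleting, or reordering earlier entries breaks verification. The sketch is an in-memory illustration with hypothetical field names, not a substitute for a managed, access-controlled log store.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only, hash-chained log of LLM interactions (in-memory sketch)."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def append(self, user_id: str, prompt: str, output: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "user_id": user_id,
            "prompt": prompt,
            "output": output,
            "prev_hash": prev_hash,
        }
        # Canonical JSON so identical content always hashes identically.
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        entry = {**body, "hash": hashlib.sha256(payload).hexdigest()}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; False means an entry was altered, removed, or reordered."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            payload = json.dumps(body, sort_keys=True).encode("utf-8")
            if body["prev_hash"] != prev_hash:
                return False
            if hashlib.sha256(payload).hexdigest() != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("u123", "Summarize AE report 17", "Summary of report 17")
log.append("u456", "Draft case narrative", "Narrative draft")
print(log.verify())  # True
log.entries[0]["output"] = "edited"
print(log.verify())  # False
```

One limitation of this design is that truncating the newest entries is not detectable from the chain alone. Periodically anchoring the latest hash in a separate system closes that gap.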

Ario Health Tip:

Embed LLMs into your existing identity and access control systems—treat them like any other enterprise-critical tool.

“A regulatory affairs team uses a gated LLM assistant that requires peer review before any generated response is used in an FDA submission.”
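A gated workflow like this can be modeled as a small state machine in which a draft cannot be released until someone other than its requester approves it. The states, the `regulatory_reviewer` role name, and the two-person rule are illustrative assumptions. In practice, the approval step would be tied to your identity provider and recorded in the audit trail.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    DRAFT = "draft"
    APPROVED = "approved"
    RELEASED = "released"


@dataclass
class GatedDraft:
    requester: str
    text: str
    status: Status = Status.DRAFT
    reviewers: list = field(default_factory=list)

    def approve(self, reviewer: str, reviewer_roles: set) -> None:
        # Two-person rule: the requester cannot approve their own draft.
        if reviewer == self.requester:
            raise PermissionError("self-approval is not allowed")
        # Hypothetical role name; map it to a real group in your identity provider.
        if "regulatory_reviewer" not in reviewer_roles:
            raise PermissionError(f"{reviewer} lacks the reviewer role")
        self.reviewers.append(reviewer)
        self.status = Status.APPROVED

    def release(self) -> str:
        # Only an approved draft can leave the gate.
        if self.status is not Status.APPROVED:
            raise PermissionError("draft must be peer-reviewed before release")
        self.status = Status.RELEASED
        return self.text


draft = GatedDraft(requester="alice", text="Draft response to FDA information request")
draft.approve("bob", {"regulatory_reviewer"})
print(draft.release())  # Draft response to FDA information request
```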


PRO TIP

Automate alerting when access rules are violated or when users attempt unauthorized actions—this adds a real-time compliance shield.

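A sketch of tiered access with violation alerting follows, assuming roles arrive as group claims from your existing identity provider. The group names and tiers are hypothetical. Every denied request goes to an alert callback instead of failing silently; `alert` is a placeholder for whatever paging or SIEM integration you use.

```python
from enum import IntEnum
from typing import Callable


class Tier(IntEnum):
    GENERAL = 1        # public literature, approved SOPs
    CONFIDENTIAL = 2   # internal study documents
    PATIENT_LEVEL = 3  # pseudonymized patient-level data


# Hypothetical mapping from identity-provider groups to the highest tier each may query.
GROUP_TIERS = {
    "all-staff": Tier.GENERAL,
    "clinical-ops": Tier.CONFIDENTIAL,
    "drug-safety": Tier.PATIENT_LEVEL,
}


def max_tier(groups: list) -> int:
    """Highest tier granted by any of the user's groups (0 if none)."""
    return max((GROUP_TIERS[g] for g in groups if g in GROUP_TIERS), default=0)


def authorize(user: str, groups: list, requested: Tier, alert: Callable[[str], None]) -> bool:
    if max_tier(groups) >= requested:
        return True
    # Denials are surfaced immediately rather than silently dropped.
    alert(f"DENIED: {user} requested {requested.name} with groups {groups}")
    return False


alerts = []
print(authorize("carol", ["clinical-ops"], Tier.CONFIDENTIAL, alerts.append))  # True
print(authorize("dave", ["all-staff"], Tier.PATIENT_LEVEL, alerts.append))  # False
print(alerts)
```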

5. VALIDATE AND DOCUMENT FOR AUDIT READINESS

In life sciences, governance isn’t optional. The way you train and manage an LLM solution must meet the same standards you apply to clinical systems. Governance ensures not only auditability and compliance, but also clarity of roles, change control, and risk mitigation.

Best Practices:

Maintain documentation of all training and fine-tuning data sources

Record prompt templates and version histories

Validate outputs through regular peer review and benchmark testing
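Recording prompt templates and version histories can be as simple as an append-only registry keyed by content hash, so any output can be traced to the exact template text that produced it. The class and field names are hypothetical, and many teams would back this with Git or a database rather than an in-memory dict.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class TemplateVersion:
    name: str
    version: int
    text: str
    sha256: str
    author: str
    created_at: str


class PromptRegistry:
    """In-memory sketch: versions are only ever appended, never overwritten."""

    def __init__(self):
        self._versions = {}  # name -> list of TemplateVersion

    def register(self, name: str, text: str, author: str) -> TemplateVersion:
        history = self._versions.setdefault(name, [])
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if history and history[-1].sha256 == digest:
            return history[-1]  # unchanged text: no new version
        tv = TemplateVersion(name, len(history) + 1, text, digest, author,
                             datetime.now(timezone.utc).isoformat())
        history.append(tv)
        return tv

    def history(self, name: str) -> list:
        return list(self._versions.get(name, []))


reg = PromptRegistry()
reg.register("ae_summary", "Summarize the adverse event report: {report}", "alice")
v2 = reg.register("ae_summary", "Summarize the adverse event report in MedDRA terms: {report}", "bob")
print(v2.version, v2.sha256[:12])
```

Storing the hash with each logged output, rather than only a version number, lets an auditor confirm the template text was not edited after the fact.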

Ario Health Tip:

Build a “compliance layer” into your LLM stack that automatically logs and versions everything—no scrambling before audits.
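For training and fine-tuning data sources, a lightweight manifest recording each file's path, size, and SHA-256 digest can show an auditor which data went into a given model version, and can reveal if that data later changed. The manifest fields, the file name, and the `pv-assistant-1.3` version label are assumptions for illustration.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def file_sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large training files need not fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(model_version: str, data_files: list) -> dict:
    return {
        "model_version": model_version,
        "sources": [
            {"path": str(p), "bytes": p.stat().st_size, "sha256": file_sha256(p)}
            for p in map(Path, data_files)
        ],
    }


def changed_sources(manifest: dict) -> list:
    """Paths that are missing or whose contents no longer match the recorded digest."""
    return [
        s["path"]
        for s in manifest["sources"]
        if not Path(s["path"]).exists() or file_sha256(Path(s["path"])) != s["sha256"]
    ]


with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "deid_safety_reports.jsonl"
    src.write_bytes(b'{"event": "rash"}\n')
    manifest = build_manifest("pv-assistant-1.3", [src])
    print(json.dumps(manifest, indent=2))
    src.write_bytes(b'{"event": "edited"}\n')  # simulate an untracked change
    drift = changed_sources(manifest)

print(drift)  # lists the modified file's path
```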

“During an internal GxP audit, a biotech firm demonstrates versioned prompt logic, output snapshots, and review annotations—all captured within its LLM governance platform.”


PRO TIP

Don’t wait for audits—schedule internal “pre-audits” every quarter to uncover gaps and demonstrate continuous improvement.

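Part of a quarterly pre-audit can be automated as a consistency check. For example, every prompt version in production should have a documented review, and every deployed model should have a record of its training data sources. The record shapes and names below are hypothetical. The aim is to surface gaps as a list before an auditor finds them.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    model_version: str
    prompt_name: str
    prompt_version: int


def pre_audit(deployments, reviews, manifests) -> list:
    """Return human-readable findings; an empty list means no gaps were detected.

    reviews:   set of (prompt_name, prompt_version) pairs with a signed-off review
    manifests: set of model_version strings that have a training-data manifest
    """
    findings = []
    for d in deployments:
        if (d.prompt_name, d.prompt_version) not in reviews:
            findings.append(f"{d.prompt_name} v{d.prompt_version}: no review record")
        if d.model_version not in manifests:
            findings.append(f"{d.model_version}: no data manifest")
    return findings


deployed = [
    Deployment("pv-assistant-1.3", "ae_summary", 2),
    Deployment("reg-drafter-0.9", "ir_response", 1),
]
findings = pre_audit(
    deployed,
    reviews={("ae_summary", 2)},
    manifests={"pv-assistant-1.3"},
)
for finding in findings:
    print(finding)
```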

Summary

As LLMs enter the enterprise mainstream, their success will increasingly be judged not just by what they can do—but by how responsibly they do it. In life sciences, that means meeting the highest bar for data privacy, traceability, and regulatory rigor.

By designing for compliance from day one, you’re not slowing innovation—you’re protecting it.

Want help building a safe and scalable LLM lifecycle?

Ario Health brings deep expertise in life sciences, real-world data, and AI implementation.

We help pharma and biotech teams design systems that are compliant by default—and continuously improving.


READ MORE FROM ARTIFICIALLY REAL:


RECENT ARTICLES

September 2025

- Sep 18, 2025 AI IN USE: Data Linking For Patient Journeys

August 2025

- Aug 18, 2025 AI IN USE: Patient Journey Tracking
- Aug 12, 2025 Survey Finds Medtechs Lack Confidence in Regulatory Data Quality
- Aug 6, 2025 MCPs: THE AI BUILDING BLOCKS

July 2025

- Jul 23, 2025 BUILDING SMARTER LIFE SCIENCES LLMs: A Summary!

June 2025

- Jun 26, 2025 BUILDING SMARTER LIFE SCIENCES LLMs: Ensuring Compliance and Data Privacy
- Jun 25, 2025 BUILDING SMARTER LIFE SCIENCES LLMs: Solution Training and Management
- Jun 18, 2025 BUILDING LIFE SCIENCES LLMs: Data Access and Integration
- Jun 11, 2025 BUILDING LIFE SCIENCES LLMs: Essential Solution Components
- Jun 4, 2025 BUILDING LIFE SCIENCES LLMs: Critical Design and Architecture Decisions

May 2025

- May 23, 2025 BUILDING SMARTER LIFE SCIENCES LLMS: A SERIES

March 2025

- Mar 12, 2025 Interlinking Real World Data At Unprecedented Scale

February 2025

- Feb 20, 2025 How AI is Expanding RWD for Clinical Trial Recruitment

January 2025

- Jan 14, 2025 Innovative Ways AI is Changing Real-World Data Analysis

November 2024

- Nov 7, 2024 Enhancing Real-World Data Analysis: How LLMs Enable Advance Data Linkage

October 2024

- Oct 25, 2024 Generative-AI Transforms Healthcare Charting
